How Mercury defeats phishing with device verification

Max Tagher is the co-founder and CTO of Mercury.

Mercury provides banking solutions to over 200,000 businesses and consumers. As with major financial institutions, that scale has made us a target for phishers who impersonate Mercury to steal customers’ funds. In this post, I’ll describe a large-scale phishing attack launched against us in August 2022. The attackers compromised 83 customers who had a total of $5M in deposits across our partner banks. Thanks to the work of our security team, the phishers stole only $300K of that $5M (all of which we reimbursed to our customers). Unfortunately, the attackers succeeded in taking millions from similar companies.

This is the story of that attack, and the device verification system we built to shut it down.

The attack

The attackers used this playbook:

- Clone Mercury's website under a lookalike domain, like mercurý.com or mercyru.com.

- Take out Google Ads to drive traffic to the fake site.

- Trick users into entering their passwords and TOTP codes (rotating 6‑digit codes from apps like Google Authenticator or Authy). Use these credentials to impersonate users and log in to Mercury.

- Redirect users to the real Mercury login page to avoid suspicion.

Once the phisher gained access to a customer’s account, they created virtual debit cards and purchased crypto, gold, or jewelry to exfiltrate funds.

Traditional prevention and why it fell short

When the attacks started, we immediately froze the cards of impacted customers and made sure attackers no longer had access to their accounts. Then we kicked off takedown efforts by:

- Requesting that registrars take down phishing domains.

- Reporting phishing ads to Google.

- Flagging malicious sites to browsers and government agencies.

But takedown strategies face multiple drawbacks:

- You must first detect the phishing site. Even if you know a customer was phished, it isn’t obvious where from.

- They're slow. In the best case, we got a phishing site taken down 8 hours after we learned of the attack, but it often took 72 hours.

- Phishers disguise their sites, showing phishing content only to visitors from certain IP addresses or only to ad-driven traffic, so the sites look legitimate when registrars review them. This can extend the lifetime of a phishing site by several days.

We’ve since started using bolster.ai, which helps detect phishing sites and whose takedown requests registrars respond to more quickly (in about 24 hours). But even with this help, you're ultimately playing whack-a-mole, since new domains and ads are easy to create, and you’re one recalcitrant registrar away from a phishing site staying up for days.

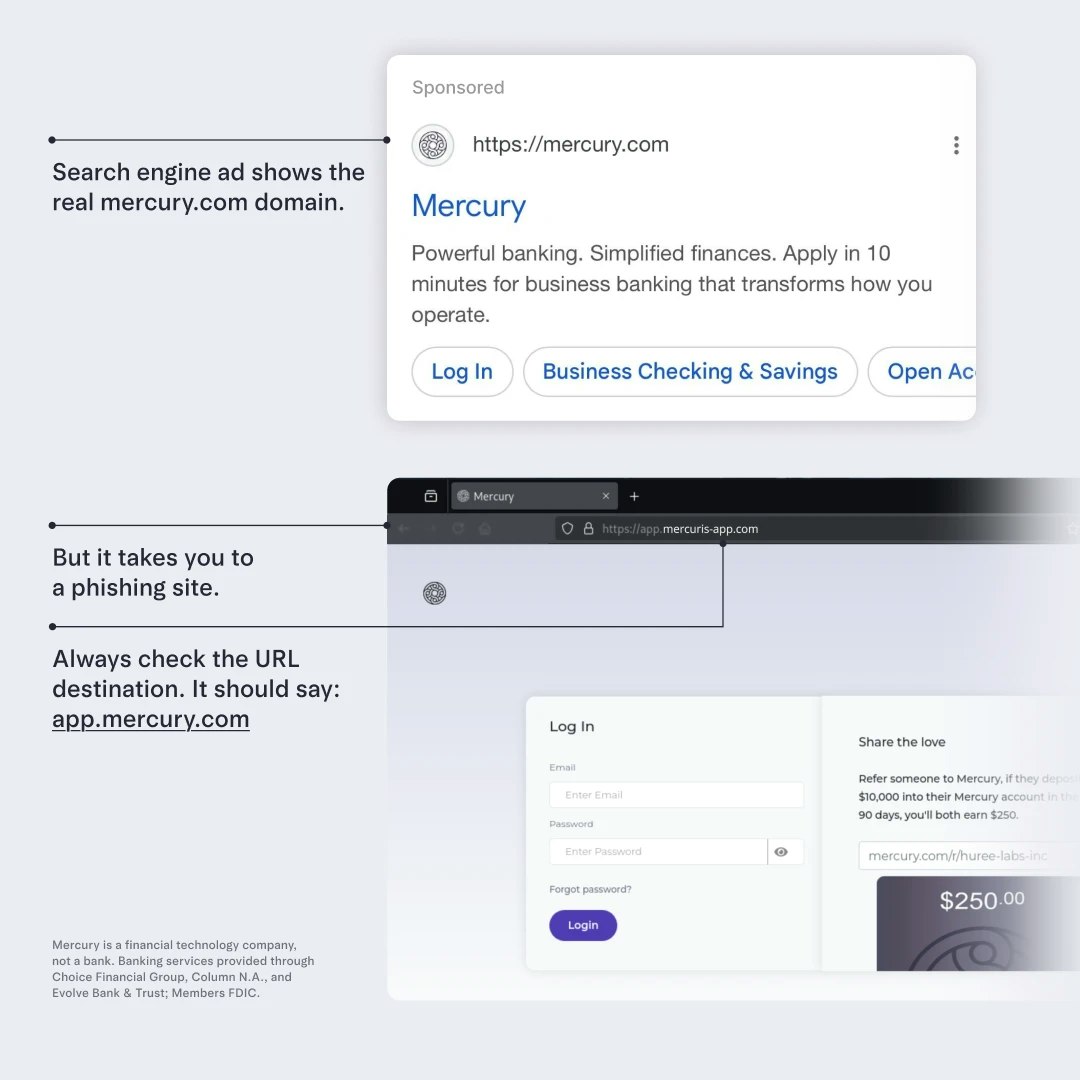

Educating users can help, but subtle typos like mercyru.com are hard to spot. And unlike browsers, Google ads don’t use punycode, which would make a URL like mercurý.com appear as xn--mercur-gza.com and consequently be much easier for a user to catch. Worst of all: Google ads are not required to display the same URL that they direct to. This is intended to allow ads to point to a tracking site that redirects to the real site, but allows attackers to use our real URL on phishing ads:
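To make the punycode point concrete, Python's built-in `idna` codec converts an internationalized domain into the ASCII form that can reveal a homoglyph. This is an illustrative sketch only; `to_ascii_domain` and `looks_internationalized` are hypothetical helpers, not part of any Mercury system.

```python
def to_ascii_domain(domain: str) -> str:
    """Return the ASCII (punycode) form of a domain via Python's IDNA 2003 codec."""
    return domain.encode("idna").decode("ascii")


def looks_internationalized(domain: str) -> bool:
    """Hypothetical check: True if any label of the domain needed punycode encoding."""
    return any(label.startswith("xn--") for label in to_ascii_domain(domain).split("."))


for domain in ["mercury.com", "mercurý.com", "mercyru.com"]:
    print(domain, "->", to_ascii_domain(domain), looks_internationalized(domain))
```

Note that mercyru.com passes this check unchanged: punycode exposes lookalike characters, but it does nothing for plain typos, which is why user education alone falls short.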

You can try to detect suspicious behavior, such as a login from a new location, and ask the user to confirm in a mobile app that the login was theirs. But this is tricky: from the user's perspective, they just tried to log in, so they might mistake the attacker's attempt for their own. Worse, the attacker knows the user's IP, so they can use a similarly geolocated IP for their login attempt.

Mercury found other markers of suspicious behavior. Sometimes† attackers used distinctive user agents we could identify. When attackers redirected users to Mercury to cover their tracks, they sometimes did so with a referrer header that identified the user as having come from the attacker’s site. Once attackers patched these holes, the best signal we found was back-to-back logins, with the first coming from a new device. This signal was a bit noisy, so we filtered it with further signals, such as the new device's session then being used to reveal debit card numbers.
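A minimal sketch of the back-to-back-login signal might look like the following. It rests on assumptions the post doesn't state: login events arrive as simple records, "back-to-back" means within a hypothetical 15-minute `WINDOW`, and the filtering signal is whether the new device's session revealed a card number. `LoginEvent` and `flag_suspicious_logins` are illustrative names, not Mercury's code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical threshold for treating two logins as "back-to-back".
WINDOW = timedelta(minutes=15)


@dataclass
class LoginEvent:
    user_id: str
    session_id: str
    at: datetime
    new_device: bool  # True if this browser had never logged in to the account before


def flag_suspicious_logins(events, card_reveal_sessions):
    """Return session IDs of new-device logins that were followed within WINDOW
    by another login to the same account and whose session also revealed a card number."""
    by_user = {}
    for event in sorted(events, key=lambda e: e.at):
        by_user.setdefault(event.user_id, []).append(event)

    flagged = []
    for user_events in by_user.values():
        # Compare each login with the next login for the same user.
        for first, second in zip(user_events, user_events[1:]):
            back_to_back = second.at - first.at <= WINDOW
            if first.new_device and back_to_back and first.session_id in card_reveal_sessions:
                flagged.append(first.session_id)
    return flagged


t0 = datetime(2022, 8, 1, 9, 0)
events = [
    # Phisher replays the stolen credentials from a new device...
    LoginEvent("u1", "s-attacker", t0, new_device=True),
    # ...then redirects the victim, who logs in on the real site.
    LoginEvent("u1", "s-victim", t0 + timedelta(minutes=1), new_device=False),
    # An ordinary new device with no follow-up login.
    LoginEvent("u2", "s-new-laptop", t0, new_device=True),
]
print(flag_suspicious_logins(events, card_reveal_sessions={"s-attacker"}))  # ['s-attacker']
```

Requiring the card-reveal signal on top of the back-to-back pattern is what trims the noise: a user who simply logs in twice from a new laptop isn't flagged unless that session also goes after card numbers.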

All of these strategies helped mitigate the problem, but none of them was airtight: even with all of them combined, attackers kept creating new phishing sites, and our engineers were waking up at 5 AM to respond to the incident.

Device verification

Fortunately, our security team had anticipated this kind of phishing attack, and had been building a system to defeat attacks just like it. After the attack started, we were able to put the final touches on it and get it deployed within one week. The system we built works like this:

- When a user logs in for the first time, we store a permanent cookie tracking that "device" (really the browser on that device). We also track the IP they logged in from.

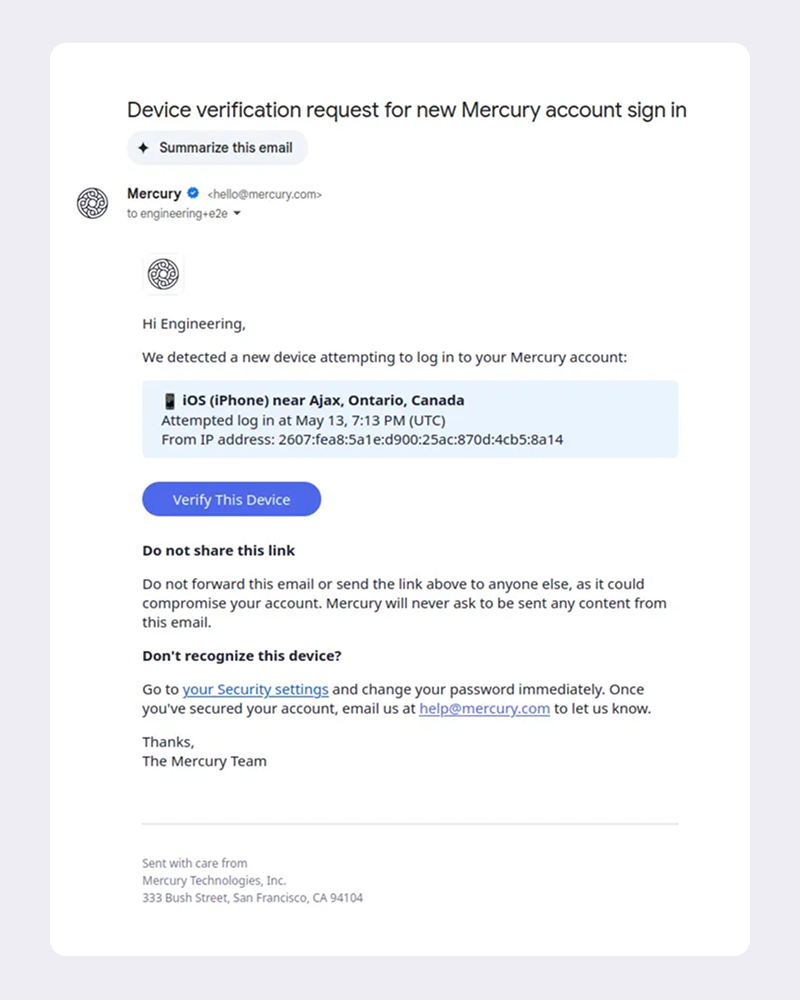

- On subsequent logins, we check whether the user is logging in from both a new device and a new IP. If so, we add a final step to the login flow, asking them to open the link in an email we've just sent them to verify their device.

- Once they open this link, we check if the device or IP matches the one that just tried to log in. If so, we add that device and IP address to a set of known good devices/IPs for that user, so on subsequent logins we know it's really them.
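The three steps above can be sketched as a small in-memory state machine. This is a minimal sketch under stated assumptions, not Mercury's implementation: `device_id` stands in for the value of the long-lived device cookie, sending the email is reduced to returning a link token, and `DeviceVerifier`, `login`, and `verify` are hypothetical names. A real deployment would also persist this state and expire unused tokens, which the sketch omits.

```python
import secrets
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PendingLogin:
    user_id: str
    device_id: str
    ip: str


@dataclass
class DeviceVerifier:
    known_devices: dict = field(default_factory=dict)  # user_id -> set of device IDs
    known_ips: dict = field(default_factory=dict)      # user_id -> set of IPs
    pending: dict = field(default_factory=dict)        # link token -> PendingLogin

    def _remember(self, user_id: str, device_id: str, ip: str) -> None:
        self.known_devices.setdefault(user_id, set()).add(device_id)
        self.known_ips.setdefault(user_id, set()).add(ip)

    def login(self, user_id: str, device_id: str, ip: str) -> Optional[str]:
        """Call after password and TOTP succeed. Returns None if the login may
        proceed, or an unguessable token to embed in the emailed link."""
        if user_id not in self.known_devices:
            # First-ever login: trust and remember this device and IP.
            self._remember(user_id, device_id, ip)
            return None
        if device_id in self.known_devices[user_id] or ip in self.known_ips[user_id]:
            return None
        # Both the device and the IP are new: require email verification.
        token = secrets.token_urlsafe(32)
        self.pending[token] = PendingLogin(user_id, device_id, ip)
        return token

    def verify(self, token: str, device_id: str, ip: str) -> bool:
        """Call when the emailed link is opened. pop() makes each token single-use."""
        attempt = self.pending.pop(token, None)
        if attempt is None:
            return False
        if device_id != attempt.device_id and ip != attempt.ip:
            return False  # opened somewhere unrelated to the login attempt
        self._remember(attempt.user_id, attempt.device_id, attempt.ip)
        return True


verifier = DeviceVerifier()
verifier.login("u1", "laptop", "203.0.113.5")  # first login: trusted and remembered

# A phisher replays stolen credentials from their own browser and IP.
phish_token = verifier.login("u1", "attacker-browser", "198.51.100.7")
# The victim opens the emailed link on their own laptop, which doesn't match.
print(verifier.verify(phish_token, "laptop", "203.0.113.5"))  # False

# A legitimate new phone: the user opens the link on that same phone.
phone_token = verifier.login("u1", "phone", "192.0.2.44")
print(verifier.verify(phone_token, "phone", "192.0.2.44"))  # True
print(verifier.verify(phone_token, "phone", "192.0.2.44"))  # False: link already used
print(verifier.login("u1", "phone", "192.0.2.99"))          # None: phone is now known
```

The phishing case fails because the link is opened by the victim, on the victim's device, while the login attempt came from the attacker's browser and IP.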

The key is that we don't ask the user to type a code into our site, since a phishing page could collect that code and relay it just as it relayed TOTP codes. There are two ways a phisher could defeat our system:

- They could instruct the user to forward the email to them. Forwarding an email would be a very unusual step in a login process, so the user is likely to suspect something is wrong, and our verification email instructs the user not to do this.

- They could ask the user to copy the URL out of the email. This is again a very unusual login action, and we make it harder by styling the link as a button, making the link single-use, and instructing the user not to share the link.

After we deployed device verification, we completely defeated the active phishing attack, and we haven’t seen a successful phishing attack since. Three years later, we're just starting to see some attempts that ask the user to copy the URL out of the email, but they're very rare: even if it's possible to phish a Mercury customer, the difficulty of doing so makes us an unattractive target compared to crypto or other financial software.

Friction

How much friction did this add to the Mercury login flow? Here are some numbers:

- 65 seconds to verify a new device, on average

- 2% of all logins are subject to device verification

- 50% of users encounter device verification once per year

- 10% encounter it 4 times

- 1% encounter it 11 times

- 0.01% encounter it 31 times. These are privacy-conscious users who use VPNs that rotate IPs and set their browsers to clear all cookies when closed. Luckily, these same users are likely to use WebAuthn (see below).

This level of friction is probably too much for most sites, but for a banking application most users appreciate the need for security.

One final complication we ran into was iOS devices using iCloud Private Relay, which gives requests made from Mobile Safari a different IP than requests made from the Mercury App. So when a user initiated device verification from our App and then opened the link from their email in Mobile Safari, neither the device nor the IP matched the login attempt. To solve this, we made our device verification URLs deep link directly to the Mercury App.
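The post doesn't say which deep-linking mechanism Mercury uses. One common option on iOS is universal links, where the website serves an `apple-app-site-association` file (at `/.well-known/apple-app-site-association`) listing which paths should open in the app. The sketch below assumes that approach and generates the file's JSON from Python; the team/bundle ID `ABCDE12345.com.example.bankapp` and the `/verify-device/*` path are hypothetical placeholders.

```python
import json

# Hypothetical placeholders: team ID + bundle ID, and the verification link path.
APP_ID = "ABCDE12345.com.example.bankapp"
VERIFY_PATH = "/verify-device/*"


def apple_app_site_association(app_id: str, path: str) -> dict:
    """Build an apple-app-site-association document that routes `path` to the app."""
    return {
        "applinks": {
            "details": [
                {
                    "appIDs": [app_id],
                    "components": [
                        {"/": path, "comment": "Open device verification links in the app"}
                    ],
                }
            ]
        }
    }


# Serve this JSON at https://<your-domain>/.well-known/apple-app-site-association
print(json.dumps(apple_app_site_association(APP_ID, VERIFY_PATH), indent=2))
```

The app also needs the matching Associated Domains entitlement (`applinks:<your-domain>`). With the link handled inside the app, the verification request comes from the same app, and therefore the same Private Relay egress, as the login attempt.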

WebAuthn

The device verification strategy outlined above is effective at preventing phishing on sites with traditional login methods like passwords and TOTP authenticator codes. But a much better way to prevent phishing is WebAuthn, the web standard for supporting things like Touch ID, Face ID, YubiKeys, or Windows Hello.

When you first register one of these methods with a site, it creates a private key scoped to the domain you're on (like mercury.com), and it won't use that key on lookalike domains like mercyru.com.
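The browser and authenticator enforce this binding before a phishing page ever gets an assertion, but the relying party can see it too. During a WebAuthn login, `clientDataJSON` records the page's origin, and the authenticator data begins with the SHA-256 hash of the relying-party ID. The sketch below checks only those two domain-binding fields and deliberately omits the challenge, flag, and signature checks a real WebAuthn library performs; `origin_and_rp_id_match`, `fake_assertion`, and the expected values are assumptions for illustration.

```python
import hashlib
import json

EXPECTED_RP_ID = "mercury.com"           # assumed relying-party ID
EXPECTED_ORIGIN = "https://mercury.com"  # assumed origin of the login page


def origin_and_rp_id_match(client_data_json: bytes, authenticator_data: bytes) -> bool:
    """Check the domain-binding fields of a WebAuthn assertion.

    Not a complete verification: a real relying party must also check the
    challenge, the flags, the signature counter, and the signature itself.
    """
    client_data = json.loads(client_data_json)
    if client_data.get("type") != "webauthn.get":
        return False
    if client_data.get("origin") != EXPECTED_ORIGIN:
        return False
    # The first 32 bytes of authenticatorData are SHA-256(rpId).
    rp_id_hash = authenticator_data[:32]
    return rp_id_hash == hashlib.sha256(EXPECTED_RP_ID.encode()).digest()


def fake_assertion(origin: str, rp_id: str):
    """Build toy inputs with the same layout a browser would send."""
    client_data = json.dumps({"type": "webauthn.get", "origin": origin}).encode()
    # rpIdHash (32 bytes) + flags (1 byte: user present + user verified) + signCount (4 bytes)
    auth_data = hashlib.sha256(rp_id.encode()).digest() + b"\x05" + (1).to_bytes(4, "big")
    return client_data, auth_data


print(origin_and_rp_id_match(*fake_assertion("https://mercury.com", "mercury.com")))  # True
print(origin_and_rp_id_match(*fake_assertion("https://mercyru.com", "mercyru.com")))  # False
```

Unlike a TOTP code, nothing here can be relayed from a lookalike site: the domain the user was actually on is baked into what gets signed.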

Since WebAuthn is immune to this style of phishing attack, Mercury doesn't ask users to verify their device if they log in with WebAuthn.

WebAuthn is more convenient and more secure than rotating authenticator codes (TOTP) as a second factor. In addition to preventing phishing, it's usually protected by biometric authentication, like your fingerprint or face. Mercury prompts users to set up WebAuthn, but with mixed results: 45% of Mac and iOS users enable Face ID or Touch ID, but only 10% of Windows users enable Windows Hello (Microsoft's Face ID equivalent).‡

Conclusion

Once the phishing campaign against our users started, we immediately deployed traditional countermeasures like registrar and Google ads takedowns. And thanks to the foresight of our security team, we were able to quickly deploy the more fool-proof device verification approach. For the 83 customers that were successfully phished, Mercury fully reimbursed their stolen deposits.

In the three years since this phishing attack, we haven't seen a comparable attack prove successful — and we believe it’s our device verification approach that is largely to thank. If having this level of security for your banking interests you, I recommend signing up or checking out our demo.

†The variation here suggests a decentralized attack—probably from one person sharing the success of an initial exploit on a dark web forum.

‡ These results are from 2023. After the test, we stopped prompting Windows users because adoption was so low.

Special thanks to Sebastian Bensusan, who had a key insight that significantly improved this post. Also thanks to Micah Fivecoate, Sam Mortenson, and Branden Wagner who reviewed this post, helped get stats on it, and responded to the attack and implemented the original device verification system.

